Julius Hietala

Working on applied ML systems at the intersection of perception, control, and real-time inference. Focused on building ML that runs in production and integrates with software and control stacks.

Currently interested in Vision-Language-Action (VLA) models with real-time controllers in the loop, targeting practical hardware rather than idealized execution.

Projects

Building ML systems that run in production, with a focus on robotics, computer vision, and real-time inference.

Dynamic Cloth Folding Robot

A robot agent for folding pieces of fabric. The accompanying paper was a best paper finalist at IROS 2022.

Stray Robots

Stray Robots is a set of tools for building 3D computer vision datasets and models.

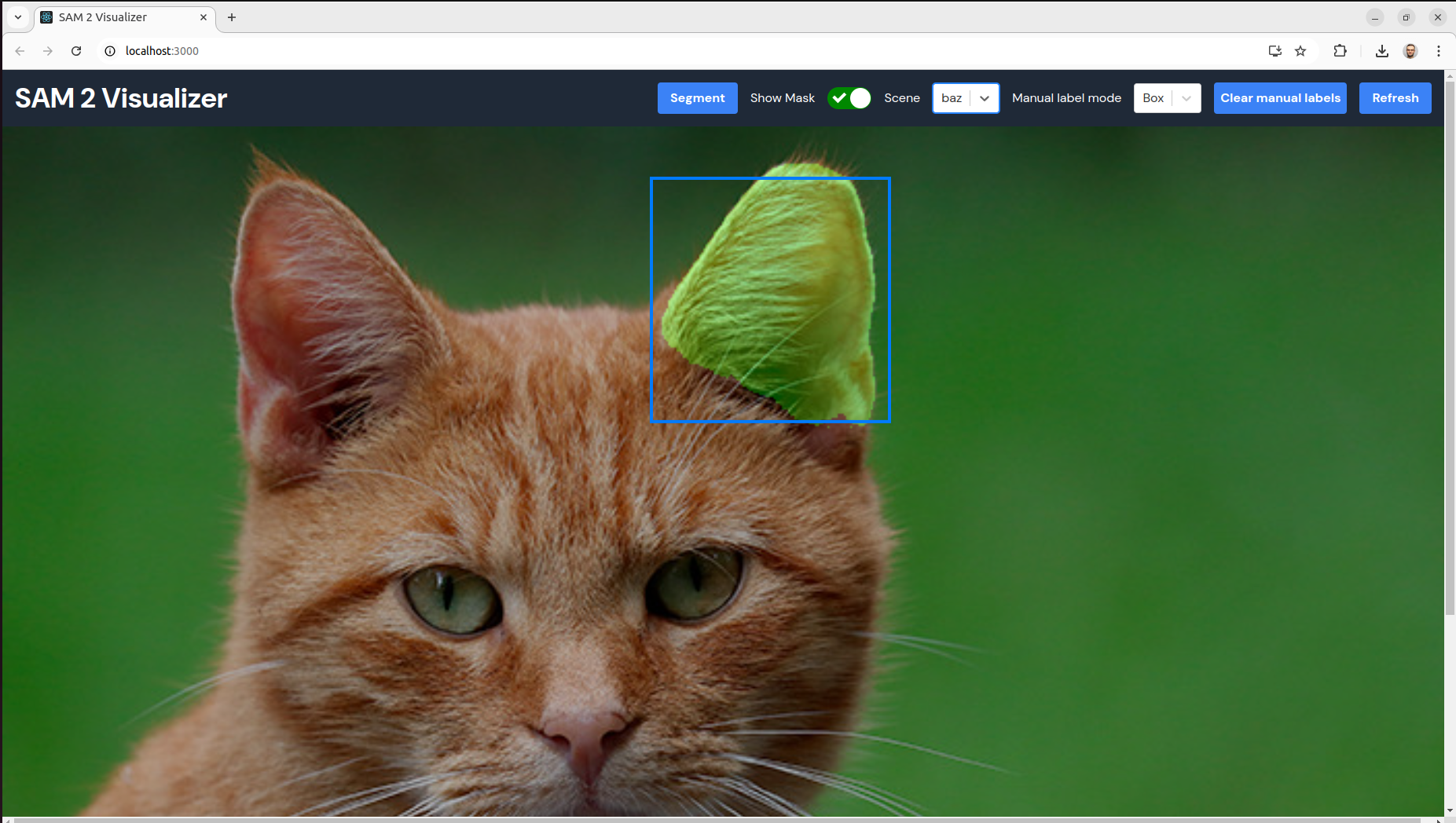

SAM 2 Visualizer

An interactive visualization tool for Meta's Segment Anything Model 2 (SAM 2). Experiment with SAM 2's segmentation capabilities through an intuitive UI.

React Native Llama

A React Native mobile app that runs LLaMA language models directly on device using ExecuTorch.

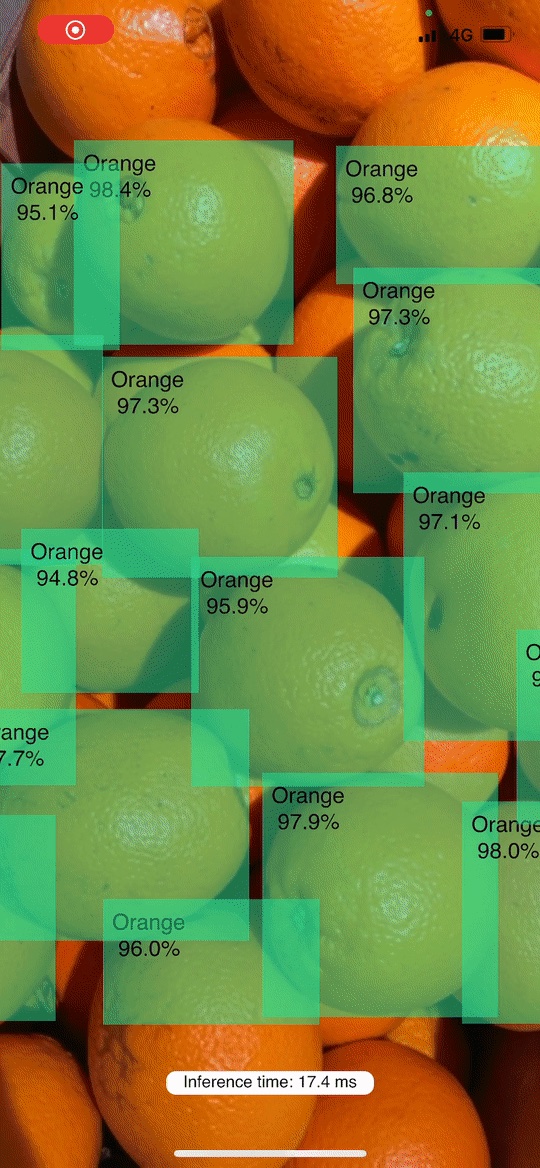

YOLOv5 iOS Object Detector

I built an iOS object detection app with YOLOv5 and Core ML. Check out the tutorial to learn how you can build one too!

YOLOv8 Image Classification App

An image classification app that uses Core ML from React Native.

crabnet

A simple neural network implemented in Rust 🦀

More on GitHub

Check out more of my open-source projects and contributions on GitHub.

Skills & Expertise

Technologies and domains I work with to build production ML systems.

Core Languages

ML Frameworks

Computer Vision

Systems & Tools

Research Areas

Contact

Interested in collaboration, research discussions, or have questions about my work?